1. Define vector space. Give an example.

A vector space is a set V on which two operations + and · are defined, called vector addition and scalar multiplication.

The operation + (vector addition) must satisfy the following conditions:

- Closure: If u and v are any vectors in V, then the sum u + v belongs to V.
- (1) Commutative law: For all vectors u and v in V, u + v = v + u.
- (2) Associative law: For all vectors u, v, w in V, u + (v + w) = (u + v) + w.
- (3) Additive identity: The set V contains an additive identity element, denoted by 0, such that for any vector v in V, 0 + v = v and v + 0 = v.
- (4) Additive inverses: For each vector v in V, the equations v + x = 0 and x + v = 0 have a solution x in V, called an additive inverse of v, and denoted by −v.

The operation · (scalar multiplication) is defined between real numbers (or scalars) and vectors, and must satisfy the following conditions:

- Closure: If v is any vector in V and c is any real number, then the product c · v belongs to V.
- (5) Distributive law: For all real numbers c and all vectors u, v in V, c · (u + v) = c · u + c · v.
- (6) Distributive law: For all real numbers c, d and all vectors v in V, (c + d) · v = c · v + d · v.
- (7) Associative law: For all real numbers c, d and all vectors v in V, c · (d · v) = (cd) · v.
- (8) Unitary law: For all vectors v in V, 1 · v = v.

Example: The simplest example of a vector space is the trivial one, {0}, which contains only the zero vector required by axiom (3). Both vector addition and scalar multiplication are trivial: 0 + 0 = 0 and c · 0 = 0 for every real number c. A basis for this vector space is the empty set, so {0} is the 0-dimensional vector space over the real numbers.
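A more familiar example is R^2 with componentwise addition and scalar multiplication. The sketch below checks axioms (1) to (8) on a handful of sample vectors and scalars; the names `add`, `smul` and `check_axioms` are illustrative, and exact `Fraction` values stand in for real numbers so equality tests are not spoiled by rounding. Passing the check only illustrates the axioms on these samples; it is not a proof.

```python
import itertools
from fractions import Fraction

# R^2 modelled as 2-tuples of exact rationals, so equality tests are exact.
ZERO = (Fraction(0), Fraction(0))

def add(u, v):
    """Vector addition in R^2 (componentwise)."""
    return (u[0] + v[0], u[1] + v[1])

def smul(c, v):
    """Scalar multiplication in R^2 (componentwise)."""
    return (c * v[0], c * v[1])

def check_axioms(vectors, scalars):
    """Check axioms (1)-(8) on every combination of the given samples.

    Closure is automatic here: add and smul always return 2-tuples of
    rationals when given rationals.
    """
    vs, cs = list(vectors), list(scalars)
    return (
        all(add(u, v) == add(v, u) for u, v in itertools.product(vs, repeat=2))  # (1)
        and all(add(u, add(v, w)) == add(add(u, v), w)
                for u, v, w in itertools.product(vs, repeat=3))                # (2)
        and all(add(ZERO, v) == v == add(v, ZERO) for v in vs)                 # (3)
        and all(add(v, smul(-1, v)) == ZERO for v in vs)                       # (4), -v = (-1)·v
        and all(smul(c, add(u, v)) == add(smul(c, u), smul(c, v))
                for c in cs for u, v in itertools.product(vs, repeat=2))       # (5)
        and all(smul(c + d, v) == add(smul(c, v), smul(d, v))
                for c, d in itertools.product(cs, repeat=2) for v in vs)       # (6)
        and all(smul(c, smul(d, v)) == smul(c * d, v)
                for c, d in itertools.product(cs, repeat=2) for v in vs)       # (7)
        and all(smul(1, v) == v for v in vs)                                   # (8)
    )

samples = [(Fraction(1), Fraction(2)), (Fraction(-3), Fraction(1, 2)), ZERO]
scalars = [Fraction(0), Fraction(2), Fraction(-1, 3)]
print(check_axioms(samples, scalars))  # True
print(check_axioms([ZERO], scalars))   # True: the trivial space {0}
```

The second call runs the same checks on the trivial space {0}, where every operation returns the zero vector.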

2. State and prove Bayes' Theorem

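Bayes’ Theorem describes the probability of an event given that some related condition (another event) is known to have occurred. It is a result about conditional probability: it expresses P(E|A) in terms of the reverse conditional probability P(A|E) and the probabilities of the events involved.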

Bayes Theorem Statement

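Let E1, E2, …, En be a set of events associated with a sample space S, where all the events E1, E2, …, En have nonzero probability of occurrence and they form a partition of S. Let A be any event associated with S with P(A) ≠ 0. Then, according to Bayes' theorem,

$$P(E_i \mid A) = \frac{P(E_i)\,P(A \mid E_i)}{\sum_{k=1}^{n} P(E_k)\,P(A \mid E_k)}, \qquad i = 1, 2, \dots, n.$$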

Bayes Theorem Proof

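According to the conditional probability formula,

$$P(E_i \mid A) = \frac{P(E_i \cap A)}{P(A)} \tag{1}$$

Using the multiplication rule of probability,

$$P(E_i \cap A) = P(E_i)\,P(A \mid E_i) \tag{2}$$

Using the total probability theorem (the Ek partition S, so A is the disjoint union of the events Ek ∩ A),

$$P(A) = \sum_{k=1}^{n} P(E_k)\,P(A \mid E_k) \tag{3}$$

Putting the values from equations (2) and (3) into equation (1), we get

$$P(E_i \mid A) = \frac{P(E_i)\,P(A \mid E_i)}{\sum_{k=1}^{n} P(E_k)\,P(A \mid E_k)}$$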
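The three steps of the proof (multiplication rule, total probability, then division) translate directly into a small function. This is a minimal sketch; the function name `posterior` and the numbers in the usage example are hypothetical: three machines produce 50%, 30% and 20% of all items, with defect rates 1%, 2% and 3%, and A is the event that a randomly chosen item is defective.

```python
from fractions import Fraction

def posterior(priors, likelihoods):
    """Bayes' theorem over a partition E1..En.

    priors[i]      = P(E_i)      (must sum to 1 for a partition)
    likelihoods[i] = P(A | E_i)
    Returns the list of posteriors P(E_i | A).
    """
    # Multiplication rule: P(E_i ∩ A) = P(E_i) P(A | E_i)
    joint = [p * l for p, l in zip(priors, likelihoods)]
    # Total probability theorem: P(A) = sum_k P(E_k) P(A | E_k)
    evidence = sum(joint)
    if evidence == 0:
        raise ValueError("P(A) must be nonzero")
    # Conditional probability: P(E_i | A) = P(E_i ∩ A) / P(A)
    return [j / evidence for j in joint]

# Hypothetical machines: shares of production and defect rates.
priors = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]
defect_rates = [Fraction(1, 100), Fraction(2, 100), Fraction(3, 100)]
print([str(p) for p in posterior(priors, defect_rates)])  # ['5/17', '6/17', '6/17']
```

Although machine 1 makes half the items, a defective item is slightly more likely to have come from machine 2 or 3, because their defect rates are higher. The posteriors always sum to 1, since the Ei partition S.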

Note:

The following terminologies are also used when the Bayes theorem is applied:

Hypotheses: The events E1, E2, …, En are called the hypotheses.

Prior probability: The probability P(Ei) is called the prior probability of hypothesis Ei.

Posterior probability: The probability P(Ei|A) is called the posterior probability of hypothesis Ei.

Bayes’ theorem is also called the formula for the probability of “causes”. Since the Ei's form a partition of the sample space S, exactly one of the events Ei occurs (one of them must occur, and no two can occur together). Hence, the above formula gives the probability of a particular Ei (i.e., a “cause”), given that the event A has occurred.

Bayes Theorem Formula

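If A and B are two events with P(B) ≠ 0, then Bayes' theorem for two events is

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} = \frac{P(B \mid A)\,P(A)}{P(B)}$$

where P(A|B) is the conditional probability of event A given that event B has already occurred,

P(B|A) is the conditional probability of event B given that event A has occurred,

P(A ∩ B) is the probability that both event A and event B occur, and

P(B) is the probability of event B.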
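As a quick check that the two expressions agree, the sketch below works on a finite sample space with equally likely outcomes. The die, the events A = "roll is even" and B = "roll is greater than 3", and the helper names `prob` and `cond` are all hypothetical choices for illustration.

```python
from fractions import Fraction

# Sample space: one roll of a fair die; every outcome equally likely.
S = set(range(1, 7))
A = {2, 4, 6}   # "the roll is even"
B = {4, 5, 6}   # "the roll is greater than 3"

def prob(event):
    """P(event) under equally likely outcomes."""
    return Fraction(len(event), len(S))

def cond(x, y):
    """P(x | y) = P(x ∩ y) / P(y); assumes P(y) != 0."""
    return prob(x & y) / prob(y)

direct = cond(A, B)                      # P(A ∩ B) / P(B)
bayes = cond(B, A) * prob(A) / prob(B)   # P(B | A) P(A) / P(B)
print(direct, bayes)  # 2/3 2/3
```

Both routes give 2/3, since P(A ∩ B) = P(B|A) P(A) by the multiplication rule.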

3. State and prove Chapman–Kolmogorov Equations

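Definition: The n-step transition probability $P_{ij}^{(n)}$ is the probability that a process currently in state i will be in state j after n additional transitions. For a continuous-time Markov chain, $P_{ij}(t)$ is likewise the probability that a process currently in state i will be in state j after an additional time t. Here the state space is X = {0, 1, …, r}.

Proof: Conditioning on the state occupied at time t (law of total probability) and then applying the Markov property together with time-homogeneity, we get

$$P_{ij}(t+s) = \sum_{k=0}^{r} P\{X(t)=k \mid X(0)=i\}\,P\{X(t+s)=j \mid X(t)=k,\, X(0)=i\} = \sum_{k=0}^{r} P_{ik}(t)\,P_{kj}(s)$$

Hence

$$P_{ij}(t+s) = \sum_{k=0}^{r} P_{ik}(t)\,P_{kj}(s) \quad \text{for all } i, j \in X,\ t, s > 0$$

These equations are known as the Chapman–Kolmogorov equations. The equations may be written in matrix terms as

$$P(t+s) = P(t)\,P(s)$$

In discrete time the same argument gives $P_{ij}^{(n+m)} = \sum_{k} P_{ik}^{(n)} P_{kj}^{(m)}$, so the n-step transition matrix is the n-th power of the one-step matrix.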
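In discrete time the Chapman–Kolmogorov equations say P^(n+m) = P^(n) P^(m). The sketch below checks this exactly for a hypothetical 3-state one-step transition matrix, using `Fraction` entries so the comparison involves no rounding; the helpers `mat_mul` and `n_step` are illustrative names.

```python
from fractions import Fraction

def mat_mul(a, b):
    """Multiply two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def n_step(p, n):
    """n-step transition matrix P^(n), built by repeated multiplication."""
    size = len(p)
    # P^(0) is the identity: with zero transitions the process stays put.
    result = [[Fraction(int(i == j)) for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, p)
    return result

# Hypothetical one-step transition matrix; each row sums to 1.
P = [
    [Fraction(1, 2), Fraction(1, 4), Fraction(1, 4)],
    [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)],
    [Fraction(0), Fraction(1, 2), Fraction(1, 2)],
]

n, m = 2, 3
lhs = n_step(P, n + m)                     # P^(n+m)
rhs = mat_mul(n_step(P, n), n_step(P, m))  # P^(n) P^(m)
print(lhs == rhs)  # True
```

Each entry of `rhs` is exactly the sum over intermediate states k in the Chapman–Kolmogorov equation.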

4. Derive Birth and Death Process

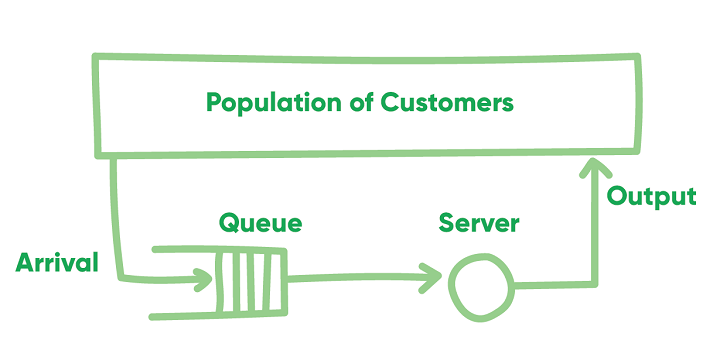

The birth–death process (or birth-and-death process) is a special case of a continuous-time Markov process where the state transitions are of only two types: "births", which increase the state variable by one, and "deaths", which decrease the state by one.

Birth–death processes have many applications in demography, queueing theory, performance engineering, epidemiology, biology and other areas.

When a birth occurs, the process goes from state n to state n + 1. When a death occurs, the process goes from state n to state n − 1. The process is specified by birth rates $\lambda_n$ (n = 0, 1, 2, …) and death rates $\mu_n$ (n = 1, 2, 3, …).
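The Kolmogorov forward equations for a birth–death process are $P_n'(t) = \lambda_{n-1}P_{n-1}(t) + \mu_{n+1}P_{n+1}(t) - (\lambda_n + \mu_n)P_n(t)$. Setting the derivatives to zero gives the balance equations, and balancing the flow across the cut between states n − 1 and n gives $\lambda_{n-1}\pi_{n-1} = \mu_n\pi_n$, so $\pi_n = \pi_0 \prod_{k=1}^{n} \lambda_{k-1}/\mu_k$. The sketch below applies this product formula, assuming (as a hypothetical simplification) that the chain is truncated at a top state N and all rates are positive; `birth_death_stationary` and `is_balanced` are illustrative names.

```python
def birth_death_stationary(birth, death):
    """Stationary distribution of a birth-death chain on states 0..N.

    birth[n] -- birth rate lambda_n out of state n, for n = 0..N-1
    death[n] -- death rate mu_{n+1} out of state n+1, for n = 0..N-1
    All rates are assumed positive.
    """
    if len(birth) != len(death):
        raise ValueError("need one death rate per birth rate")
    weights = [1.0]  # unnormalised pi_0
    for lam, mu in zip(birth, death):
        # Cut balance between n-1 and n: lambda_{n-1} pi_{n-1} = mu_n pi_n
        weights.append(weights[-1] * lam / mu)
    total = sum(weights)
    return [w / total for w in weights]

def is_balanced(pi, birth, death, tol=1e-12):
    """Check global balance: total rate out of each state equals rate in."""
    top = len(pi) - 1
    for n in range(top + 1):
        lam_n = birth[n] if n < top else 0.0   # no births out of the top state
        mu_n = death[n - 1] if n > 0 else 0.0  # no deaths out of state 0
        out_rate = pi[n] * (lam_n + mu_n)
        in_rate = ((pi[n - 1] * birth[n - 1] if n > 0 else 0.0)
                   + (pi[n + 1] * death[n] if n < top else 0.0))
        if abs(out_rate - in_rate) > tol:
            return False
    return True

# Hypothetical example: constant birth rate 1, constant death rate 2, N = 3.
pi = birth_death_stationary([1.0] * 3, [2.0] * 3)
print(pi)                                      # approx [0.533, 0.267, 0.133, 0.067]
print(is_balanced(pi, [1.0] * 3, [2.0] * 3))   # True
```

With constant rates the stationary probabilities fall off geometrically with ratio λ/μ, which is the pattern of an M/M/1 queue with limited capacity.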

5. State Pollaczek–Khinchine formula

The Pollaczek–Khinchine formula states a relationship between the queue length and service time distribution Laplace transforms for an M/G/1 queue (where jobs arrive according to a Poisson process and have general service time distribution). The term is also used to refer to the relationships between the mean queue length and mean waiting/service time in such a model.[1]
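In its mean-value form, the Pollaczek–Khinchine formula gives the mean number of jobs in an M/G/1 system as $L = \rho + \frac{\rho^2 + \lambda^2 \operatorname{Var}(S)}{2(1 - \rho)}$, where λ is the arrival rate, S is the service time and $\rho = \lambda E[S] < 1$ is the utilisation; Little's law then gives the mean time in system W = L/λ. A minimal sketch with hypothetical function names:

```python
def pk_mean_number_in_system(lam, mean_s, var_s):
    """Mean number of jobs in an M/G/1 system (Pollaczek-Khinchine).

    lam    -- Poisson arrival rate (lambda)
    mean_s -- mean service time E[S]
    var_s  -- variance of the service time Var(S)
    """
    rho = lam * mean_s  # server utilisation
    if not 0 <= rho < 1:
        raise ValueError("need rho = lam * E[S] < 1 for a stable queue")
    return rho + (rho ** 2 + lam ** 2 * var_s) / (2 * (1 - rho))

def pk_mean_time_in_system(lam, mean_s, var_s):
    """Mean time in system, W = L / lambda (Little's law)."""
    return pk_mean_number_in_system(lam, mean_s, var_s) / lam

# Exponential service with mean 1 has variance 1: the M/M/1 case.
print(pk_mean_number_in_system(0.5, 1.0, 1.0))  # 1.0, matches rho / (1 - rho)
# Deterministic service (variance 0): the M/D/1 case.
print(pk_mean_number_in_system(0.5, 1.0, 0.0))  # 0.75
```

For exponential service Var(S) = E[S]², and the formula reduces to the familiar M/M/1 result L = ρ/(1 − ρ); removing service-time variability (M/D/1) lowers the mean.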

6. Define Markov Chain

A stochastic process has the Markov property if the conditional probability distribution of future states of the process (conditional on both past and present values) depends only upon the present state; that is, given the present, the future does not depend on the past. A process with this property is said to be Markovian or a Markov process. The most famous Markov process is a Markov chain. Brownian motion is another well-known Markov process.

The Markov property is the memoryless property of a stochastic process: the probabilities of future states do not depend on the steps that led up to the present state.

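A Markov chain is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. For a discrete-time chain X0, X1, X2, … this means

$$P(X_{n+1}=j \mid X_n=i, X_{n-1}=i_{n-1}, \dots, X_0=i_0) = P(X_{n+1}=j \mid X_n=i).$$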
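A short simulation makes the definition concrete: each new state is drawn using only the row of the transition matrix for the current state. This is a minimal sketch; the two-state "weather" matrix and the name `simulate_chain` are hypothetical.

```python
import random

def simulate_chain(P, start, steps, rng):
    """Return a path X_0, X_1, ..., X_steps of a discrete-time Markov chain.

    P[i][j] is the one-step probability of moving from state i to state j.
    Each step draws the next state from row P[state] only; earlier states
    are never consulted, which is exactly the Markov property.
    """
    states = range(len(P))
    path = [start]
    state = start
    for _ in range(steps):
        state = rng.choices(states, weights=P[state])[0]
        path.append(state)
    return path

# Hypothetical two-state chain (0 = "sunny", 1 = "rainy").
P = [[0.9, 0.1],
     [0.5, 0.5]]
print(simulate_chain(P, start=0, steps=10, rng=random.Random(42)))
```

Passing an explicit `random.Random` instance keeps runs reproducible without touching the global random state.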

Markov chains have many applications as statistical models of real-world processes, such as studying cruise control systems in motor vehicles, queues or lines of customers arriving at an airport, currency exchange rates and animal population dynamics.

Ergodicity

A state i is said to be ergodic if it is aperiodic and positive recurrent.
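One way to see why aperiodicity matters: in a finite, irreducible chain whose states are ergodic, every row of P^n converges to the same stationary distribution, whereas in a periodic chain P^n keeps oscillating. The sketch below compares two hypothetical two-state chains; `mat_mul` and `mat_pow` are illustrative helper names.

```python
def mat_mul(a, b):
    """Multiply two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def mat_pow(p, n):
    """P^n by repeated multiplication, starting from the identity."""
    size = len(p)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, p)
    return result

# Finite, irreducible, with a self-loop (so aperiodic): both states ergodic.
ergodic = [[0.9, 0.1],
           [0.5, 0.5]]
# Positive recurrent but with period 2: the states are NOT ergodic.
periodic = [[0.0, 1.0],
            [1.0, 0.0]]

print(mat_pow(ergodic, 50))   # both rows close to [5/6, 1/6]
print(mat_pow(periodic, 50))  # identity matrix
print(mat_pow(periodic, 51))  # the swap matrix again: no limit exists
```

For the ergodic chain the stationary distribution solves π = πP, here π = (5/6, 1/6), and every starting state forgets its origin; the periodic chain alternates between two matrices forever.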