Dijkstra's Algorithm

Dijkstra's algorithm allows us to find the shortest path between any two vertices of a graph

Dijkstra's Algorithm, which uses a priority queue to keep track of the

vertices that have been visited and their current distances from the source.

One way to implement this priority queue is as a Fibonacci heap.

Algorithm

Let the distance of vertex Y be the distance from the source vertex to Y.

Dijkstra's algorithm will assign some initial distance values and will try

to improve them step by step.

- Mark all vertices unvisited. Create a set of all the unvisited vertices

called the unvisited set.

- Assign to every vertex a tentative distance value: set it to zero for

our source vertex and to infinity for all other vertices. Set the

source vertex as current.

- For the current vertex, consider all of its unvisited neighbors and

calculate their tentative distances through the current vertex by adding

the weight of the edge connecting the current vertex and neighbor to

the current vertex's assigned value.

Compare the newly calculated tentative distance to the current

assigned value and assign the smaller one.

- When we are done considering all of the unvisited neighbors of the

current vertex, mark the current vertex as visited and remove it from

the unvisited set. A visited vertex will never be checked again.

- Once all reachable vertices are marked, then stop.

- Otherwise, select the unvisited vertex that is marked with the

smallest tentative distance, set it as the new "current vertex", and

go back to step 3.

Fibonacci Heaps

Fibonacci heaps are sometimes referred to as “lazy” data structures

because their structure is less strictly managed as they perform

operations than in many other data structures. But this “laziness” allows

them to have extremely fast amortized time complexities for common heap

operations.

| Operation |

Time Complexity |

insert() |

O(1) |

reportMin() |

O(1) |

deleteMin() |

O(log n) amortized |

delete() |

O(log n) amortized |

decreaseKey() |

O(1) amortized |

merge() |

O(1) |

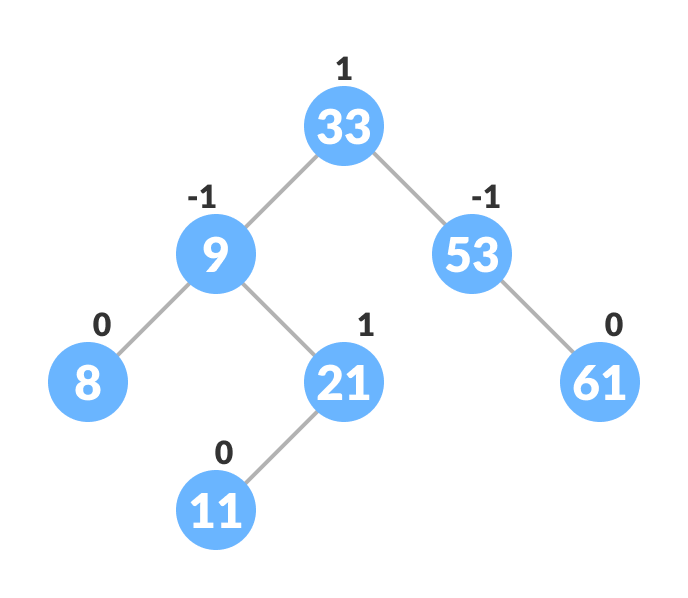

Fibonacci heaps are implemented using a circular and doubly-linked root list

of heap-ordered trees in which each node contains a pointer to its parent

and to one of its children. The children of each node are doubly-linked in

a circular linked list. There is always a pointer to the node with the minimum key

in the heap. It is this high connectivity between nodes that allows O(1)

time insertion, minimum-reporting, key-decreasing, and merging.

Putting Them Together

Dijkstra's original shortest path algorithm does not use a priority queue,

and runs in O(V2) time. When using

a Fibonacci heap as a priority queue, it runs in O(E + V log V)

time, which is asymptotically the fastest known time complexity for this problem.

However, due to their programming complexity, and for some practical purposes,

Fibonacci heaps are not always a necessary or significant improvement.

Fibonacci heaps are best reserved for applications in which the number of deletions

is small compared to the use of other operations. In further iterations of this

demo, the efficacy of different priority queue implementations could

be tested with timing tests for graphs of different sizes and properties.

For now, animation overhead erases any improvements made by the Fibonacci heap,

and this demo solely shows a visualization of the two structures in action.