- Developed in 1995 by James Kennedy and Russ Eberhart.

- It was inspired by social behavior of bird flocking or fish schooling.

- PSO applies the concept of social interaction to problem solving.

- PSO is a computational method that optimizes a problem by iteratively trying to improve a candidate solution with regard to a given measure of quality.

- PSO is a metaheuristic as it makes few or no assumptions about the problem being optimized and can search very large spaces of candidate solutions.

- Also, PSO does not use the gradient of the problem being optimized, which means PSO does not require that the optimization problem be differentiable as is required by classic optimization methods such as gradient descent and quasi-newton methods.

- However, metaheuristics such as PSO do not guarantee an optimal solution is ever found.

- PSO is a robust optimization technique that applies the social intelligence of swarms to solve optimization problems.

- Bird flocking and fish schooling are the example of swarm intelligence. In bird flocking, each swarm update its position by following collective intelligence of the group and its own intelligence.

- First version of this algorithm was proposed by J. Kennedy, R. Eberhart.In this version, a set of potential solutions (called as particles), are dispersed at various points in the solution space. Each point has some objective function value, and thus has fitness, for its current location. Each particle’s movement is influenced by the following two factors:

- The best fitness achieved by the particle so far. (BFS)

- The best fitness achieved by any particle in the neighbourhood. (BFN)

Vi=ω∗Vi+c1∗r1∗(Pi Xi)+c2∗r2∗(GXi) (1)

Xi=Xi+Vi (2)#Bird Flocking

A flock is a gathering of a group of same species animals in order to forage or travel with one another. In avians flocks are typically seen in association with migration. While this is true it can also be seen that flocking is important in safety from predation and foraging benefits.

Flocking is the behavior exhibited when a group of birds, called a flock, are foraging or in flight.

From the perspective of the mathematical modeller, "flocking" is the collective motion by a group of self-propelled entities and is a collective animal behavior exhibited by many living beings such as birds, fish, bacteria, and insects. It is considered an emergent behavior arising from simple rules that are followed by individuals and does not involve any central coordination.

In 1986, Craig Reynolds described this process in 3 simple behaviors:

- Separation- avoid crowding local flockmates

- Alignment- move towards the average heading of local flockmates

- Cohesion- move toward the average position of local flockmates

#Fish Schooling

A school is a group of fish swimming in a synchronizing and polarized way. The individuals of this group adopt a schooling behavior (Pitcher, 1983). Schooling behaviors are characterized by the tendency to polarize, with individuals adopting the same orientation and swimming in the same direction

• Fish schooling and aggregation behaviors are some of the most prominent social and group activities exhibited by fishes.

• Fish may school or form aggregations for many reasons, including foraging, reproduction, and defense from predators.

•One of the most enduring hypotheses regarding fish schooling is that fish in schools can obtain a hydrodynamic advantage, thus reducing the cost of locomotion, by taking advantage of the wakes shed by neighbors within the school.

Terminologies in PSO

•Collection of flying particles (swarm) - Changing solutions

• Search area - Possible solutions

•Movement towards a promising area to get the global optimum

•Each particle keeps track:

its best solution, personal best, pbest or lbest

the best value of any particle, global best, gbest

- Each particle keeps track of its coordinates in the solution space which are associated with the best solution (fitness) that has achieved so far by that particle. This value is called personal best , pbest.

- Another best value that is tracked by the PSO is the best value obtained so far by any particle in the neighborhood of that particle. This value is called gbest.

- The basic concept of PSO lies in accelerating each particle toward its pbest and the gbest locations, with a random weighted accelaration at each time step.

Working

It solves a problem by having a population of candidate solutions, here dubbed particles, and moving these particles around in the search-space according to simple mathematical formula over the particle's position and velocity.

Each particle's movement is influenced by its local best known position, but is also guided toward the best known positions in the search-space, which are updated as better positions are found by other particles. This is expected to move the swarm toward the best solutions.

Particles Velocity

1. Inertia: Makes the particle move in the same direction and with the same velocity

2. Personal Influence: Improves the individual, Makes the particle return to a previous

position, better than the current, Conservative

3. Social Influence: Makes the particle follow the best neighbors direction

Intensification: explores the previous solutions, finds the best solution of a given region

Diversification: searches new solutions, finds the regions with potentially the best solutions

Evolution

PSO is originally attributed to Kennedy, Eberhart and Shi and was first intended for simulating social behaviour, as a stylized representation of the movement of organisms in a bird flock or fish school. The algorithm was simplified and it was observed to be performing optimization. The book by Kennedy and Eberhart describes many philosophical aspects of PSO and swarm intelligence. An extensive survey of PSO applications is made by Poli. Recently, a comprehensive review on theoretical and experimental works on PSO has been published by Bonyadi and Michalewicz.

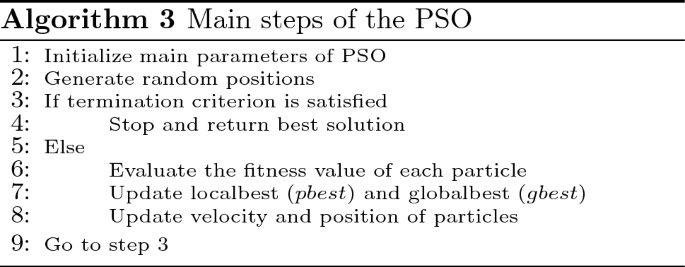

The basic PSO parameters are swarm size or number of

particles, number of iterations, velocity components, and acceleration coefficients

illustrated bellow. In addition, PSO is also influenced by inertia weight, velocity

clamping, and velocity constriction

Neighborhood Topologies

A neighborhood must be defined for each particle [7]. This neighborhood

determines the extent of social interaction within the swarm and influences a

particular particle’s movement. Less interaction occurs when the neighborhoods in

the swarm are small [4]. For small neighborhood, the convergence will be slower

but it may improve the quality of solutions. For larger neighborhood, the

convergence will be faster but the risk that sometimes convergence occurs earlier

[7]. To solve this problem, the search process starts with small neighborhoods size

and then the small neighborhoods size is increased over time. This technique

ensures an initially high diversity with faster convergence as the particles move

towards a promising search region [4].

The PSO algorithm is social interaction among the particles in the entire swarm.

Particles communicate with one another by exchanging information about the

success of each particle in the swarm. When a particle in the whole swarm finds a

better position, all particles move towards this particle. This performance of the

particles is determined by the particles’ neighborhood [4]. Researchers have

worked on developing this performance by designing different types of

neighborhood structures [15]. Some neighborhood structures or topologies are

discussed below:

Neighbourhoods and topologies

- The topology of the swarm defines the subset of particles with which each particle can exchange information.

- The basic version of the algorithm uses the global topology as the swarm communication structure. This topology allows all particles to communicate with all the other particles, thus the whole swarm share the same best position g from a single particle. However, this approach might lead the swarm to be trapped into a local minimum, thus different topologies have been used to control the flow of information among particles.

- For instance, in local topologies, particles only share information with a subset of particles. This subset can be a geometrical one – for example "the m nearest particles" – or, more often, a social one, i.e. a set of particles that is not depending on any distance.

- In such cases, the PSO variant is said to be local best (vs global best for the basic PSO).

A commonly used swarm topology is the ring, in which each particle has just two neighbours, but there are many others. The topology is not necessarily static. In fact, since the topology is related to the diversity of communication of the particles, some efforts have been done to create adaptive topologies (SPSO, APSO, stochastic star, TRIBES, Cyber Swarm, and C-PSO).

Inshort,

Two different kinds of neighborhoods are defined for PSO:

1. In the gbest swarm, all the particles are neighbors of each other; thus, the position of the best overall particle in the swarm is used in the social term of the velocity update equation.

•It is assumed that gbest swarms converge fast, as all the particles are attracted simultaneously to the best part of the search space.

•However, if the global optimum is not close to the best particle, it may be impossible to the swarm to explore other areas; this means that the swarm can be trapped in local optima.

2. In the pbest / lbest swarm, only a specific number of particles (neighbor count) can affect the velocity of a given particle.

•The swarm will converge slower but can locate the global optimum with a greater chance.

In the lbest / pbest topology, a number of neighbors are selected from each side of the particle

Convergence

In relation to PSO the word convergence typically refers to two different definitions:

- Convergence of the sequence of solutions (aka, stability analysis, converging) in which all particles have converged to a point in the search-space, which may or may not be the optimum,

- Convergence to a local optimum where all personal bests p or, alternatively, the swarm's best known position g, approaches a local optimum of the problem, regardless of how the swarm behaves.

Convergence of the sequence of solutions has been investigated for PSO.[15][16][17] These analyses have resulted in guidelines for selecting PSO parameters that are believed to cause convergence to a point and prevent divergence of the swarm's particles (particles do not move unboundedly and will converge to somewhere). However, the analyses were criticized by Pedersen[22] for being oversimplified as they assume the swarm has only one particle, that it does not use stochastic variables and that the points of attraction, that is, the particle's best known position p and the swarm's best known position g, remain constant throughout the optimization process. However, it was shown[38] that these simplifications do not affect the boundaries found by these studies for parameter where the swarm is convergent. Considerable effort has been made in recent years to weaken the modelling assumption utilized during the stability analysis of PSO,[39] with the most recent generalized result applying to numerous PSO variants and utilized what was shown to be the minimal necessary modeling assumptions.[40]

Convergence to a local optimum has been analyzed for PSO in[41] and.[42] It has been proven that PSO needs some modification to guarantee finding a local optimum.

This means that determining convergence capabilities of different PSO algorithms and parameters still depends on empirical results. One attempt at addressing this issue is the development of an "orthogonal learning" strategy for an improved use of the information already existing in the relationship between p and g, so as to form a leading converging exemplar and to be effective with any PSO topology. The aims are to improve the performance of PSO overall, including faster global convergence, higher solution quality, and stronger robustness.[43] However, such studies do not provide theoretical evidence to actually prove their claims.

Comparison with other evolutionary computation techniques

• Unlike in genetic algorithms, evolutionary programming and evolutionary strategies, in PSO, there is no selection operation.

• All particles in PSO are kept as members of the population through the course of the run

• PSO is the only algorithm that does not implement the survival of the fittest.

• No crossover operation in PSO.

• eq 1resembles mutation in EP.

• In EP balance between the global and local search can be adjusted through the strategy parameter while in PSO the balance is achieved through the inertial weight factor (w) of eq. 1

Advantages & Disadvantages of PSO

Advantages of the PSO algorithm [14] [15]:

1) PSO algorithm is a derivative-free algorithm.

2) It is easy to implementation, so it can be applied both in scientific research

and engineering problems.

3) It has a limited number of parameters and the impact of parameters to the

solutions is small compared to other optimization techniques.

4) The calculation in PSO algorithm is very simple.

5) There are some techniques which ensure convergence and the optimum

value of the problem calculates easily within a short time.

6) PSO is less dependent of a set of initial points than other optimization

techniques.

7) It is conceptually very simple.

Disadvantages of the PSO algorithm [13]:

1) PSO algorithm suffers from the partial optimism, which degrades the

regulation of its speed and direction.

2) Problems with non-coordinate system (for instance, in the energy field)

exit.

Advantages

• Insensitive to scaling of design variables

• Simple implementation

• Easily parallelized for concurrent processing

• Derivative free

• Very few algorithm parameters

• Very efficient global search algorithm

Disadvantages

• Tendency to a fast and premature convergence in mid optimum points

• Slow convergence in refined search stage (weak local search ability)

https://slideplayer.com/amp/1528196/

Millonas proposed five basic principles (van den Bergh 2001):

(1) Proximity: the swarm should be able to carry out simple space and time computations.

(2) Quality: the swarm should be able to sense the quality change in the environment and response it.

(3) Diverse response: the swarm should not limit its way to get the resources in a narrow scope.

(4) Stability: the swarm should not change its behavior mode with every environmental change.

(5) Adaptability: the swarm should change its behavior mode when this change is worthy.

Explain proximity principle, stability principle and adaptability principle of swam intelligence.

Proximity principle: The basic units of a swarm should be capable of giving the respond back to to environmental variance triggered by interactions among agents. However, some fundamental behaviors are shared such as living-resource searching and nest-building.

Principle of stability: The population should not change its mode of behavior every time the environment changes.

Principle of adaptability: The swarm is sensitive to the changes in the environment that result in different swarm behaviour.