Biological Background, Schema, Theorem, GA Operators: Crossover, Mutation and Its Types-GA Algorithm, Variations Of GA: Adaptive GA and Real Coded GA

“Anyone who stops learning is old, whether at twenty or eighty. Anyone who keeps learning stays young. The greatest thing in life is to keep your mind young.” — Henry Ford

Biological Background, Schema, Theorem, GA Operators: Crossover, Mutation and Its Types-GA Algorithm, Variations Of GA: Adaptive GA and Real Coded GA

SA algorithm is one of the most preferred heuristic methods for solving optimization problems.

It is a probabilistic technique which is an effective and general form of optimization.

It is useful in finding global optima of a given function when there is lot of local optima.

“Annealing” (heat (metal or glass) and allow it to cool slowly, in order to remove internal stresses and toughen it) refers to an analogy with thermodynamics, specifically with the way that metals cool and anneal.

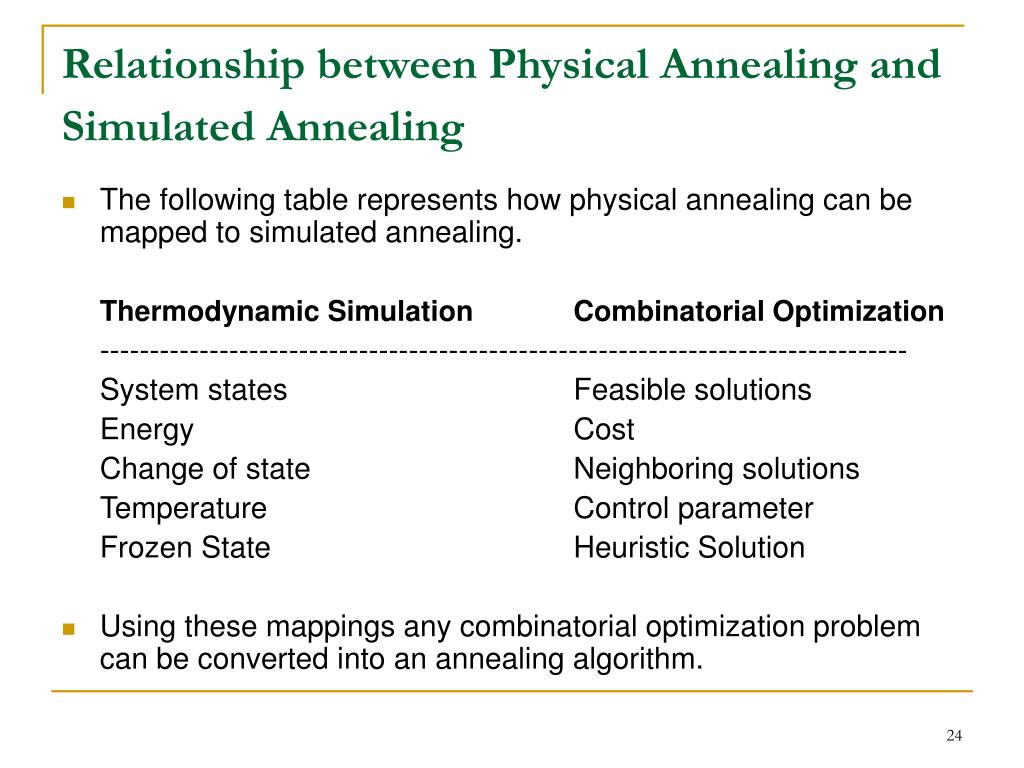

Simulated annealing uses the objective function of an optimization problem instead of the energy of a material.

Simulated annealing is based on metallurgical practices by which a material is heated to a high temperature and cooled. At high temperatures, atoms may shift unpredictably, often eliminating impurities as the material cools into a pure crystal. This is replicated via the simulated annealing optimization algorithm, with energy state corresponding to current solution.

Physical Annealing:

Another important part of this analogy is the following equation from Thermal Dynamics:

This equation calculates the probability that the Energy Magnitude will increase. We can calculate this value given some Energy Magnitude and some temperature t along with the Boltzmann constant k.

Implementation of SA is surprisingly simple. The algorithm is basically hill-climbing except instead of picking the best move, it picks a random move. If the selected move improves the solution, then it is always accepted. Otherwise, the algorithm makes the move anyway with some probability less than 1. The probability decreases exponentially with the “badness” of the move, which is the amount ΔE by which the solution is worsened (i.e., energy is increased.)

Acceptance Criteria

The law of thermodynamics state that at temperature, T, the probability of

an increase in energy of magnitude, ΔE, is given by

P(ΔE) = e−ΔE/kT

where k is a constant that relates temperature to energy known as Boltzmann’s constant.

At higher values of T, uphill moves are more likely to occur. As T tends to zero, they become more and more unlikely, until the algorithm behaves more or less like hill-climbing.

In a typical SA optimization, T starts high and is gradually decreased according to an “annealing schedule”.

A certain number of iterations are carried out at each temperature and then the temperature is decreased. This is repeated until the system freezes into a steady state.

This equation is directly used in simulated annealing, although it is usual to drop the Boltzmann constant as this was only introduced into the equation to cope with different materials. Therefore, the probability of accepting a worse state is given by the equation

P = e−ΔE/T > r

where, ΔE= the change in the evaluation function

T= the current temperature

r= a random number between 0 and 1

The probability of accepting a worse move is a function of both the temperature of the system and of the change in the cost function.

As the temperature of the system decreases the probability of accepting a worse move is decreased. This is the same as gradually moving to a frozen state in physical annealing.

Also if the temperature is zero then only better moves will be accepted which effectively makes simulated annealing act like hill climbing.

Simulated annealing is typically used in discrete, but very large, configuration spaces, such as the set of possible orders of cities in the Traveling Salesman problem and in VLSI routing. It has a broad range of application that is still being explored.

The mapping of time to temperature and how fast the temperature decreases is called the Annealing Schedule.

The

algorithm starts initially with

For any given finite problem, the probability that the simulated annealing algorithm terminates with a global optimal solution approaches 1 as the annealing schedule is extended. This theoretical result, however, is not particularly helpful, since the time required to ensure a significant probability of success will usually exceed the time required for a complete search of the solution space.

The following pseudocode presents the simulated annealing heuristic as described above. It starts from a state s0 and continues until a maximum of kmax steps have been taken. In the process, the call neighbour(s) should generate a randomly chosen neighbour of a given state s; the call random(0, 1) should pick and return a value in the range [0, 1], uniformly at random. The annealing schedule is defined by the call temperature(r), which should yield the temperature to use, given the fraction r of the time budget that has been expended so far.

E(), neighbour(), P(), temperature() s and e to sbest and ebest

and perhaps restart the annealing schedule. The decision to restart

could be based on several criteria. Notable among these include

restarting based on a fixed number of steps, based on whether the

current energy is too high compared to the best energy obtained so far,

restarting randomly, etc.

Circuit Partitioning & Placement

Hardware/Software Partitioning

Graph Partitioning

VLSI: Placement, routing

Image Processing

Strategy Scheduling for capital products with complex product structure

Umpire scheduling in US open tournament

Event-based learning situations

Practical Issues

The maximum temperature

Scheme for decreasing temperature

Strategy for proposing updates

Simulated Annealing versus Hill Climbing

REF

https://www.geeksforgeeks.org/simulated-annealing/

https://en.wikipedia.org/wiki/Simulated_annealing

https://www.sciencedirect.com/topics/engineering/simulated-annealing-algorithm

https://towardsdatascience.com/optimization-techniques-simulated-annealing-d6a4785a1de7

using System; public class Solution { public static void Main () { int T = Convert . ToInt32 ( Console . ReadLine...