1. How is data mining related to business intelligence?

- Businesses

use data mining for business intelligence and to identify specific data

that may help their companies make better leadership and management

decisions.

- Data mining experts sift through large data sets to identify trends and patterns.

- Various software packages and analytical tools can be used for data mining.

- The process can be automated or done manually.

- Data

mining allows individual workers to send specific queries for

information to archives and databases so that they can obtain targeted

results.

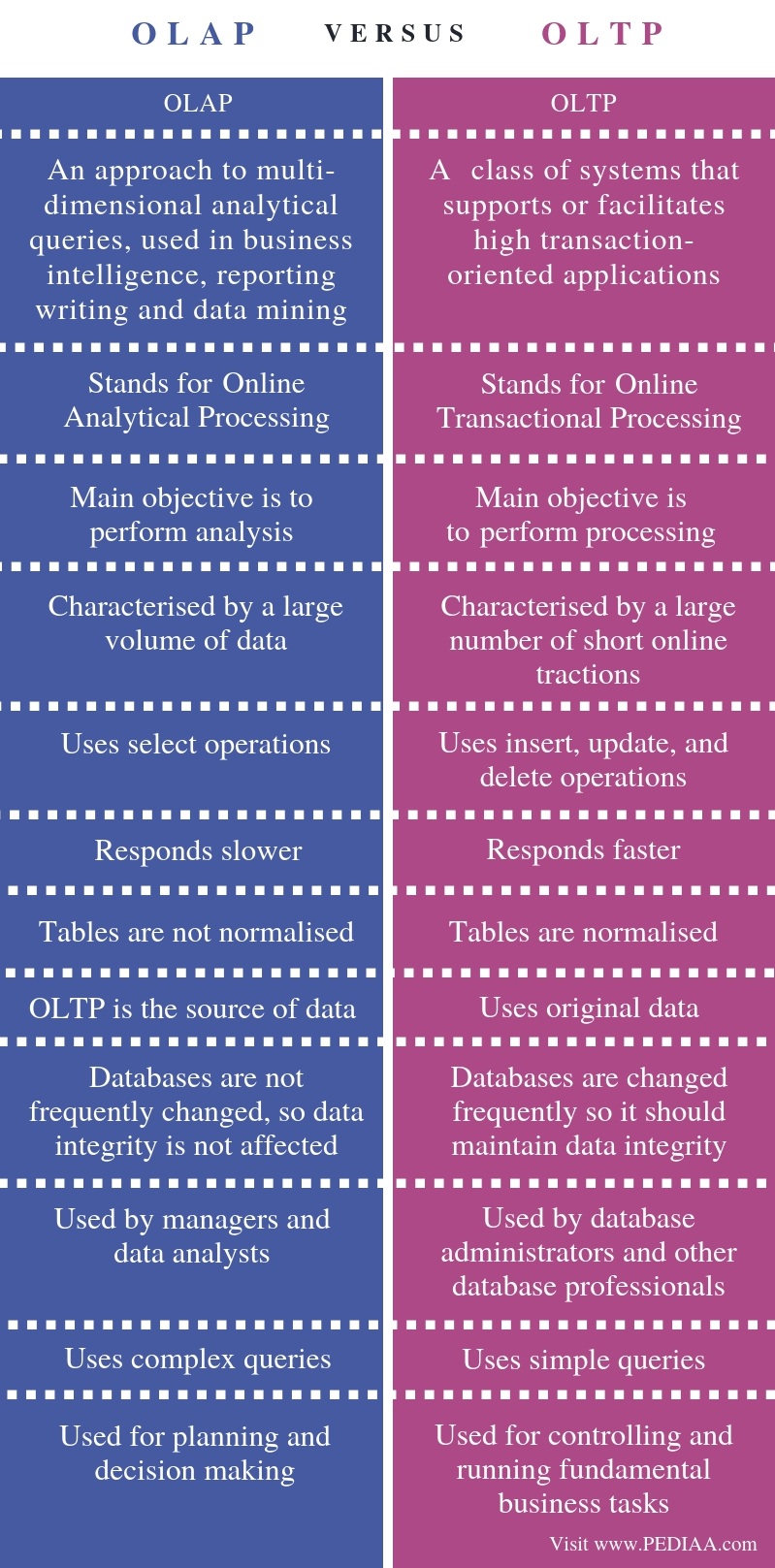

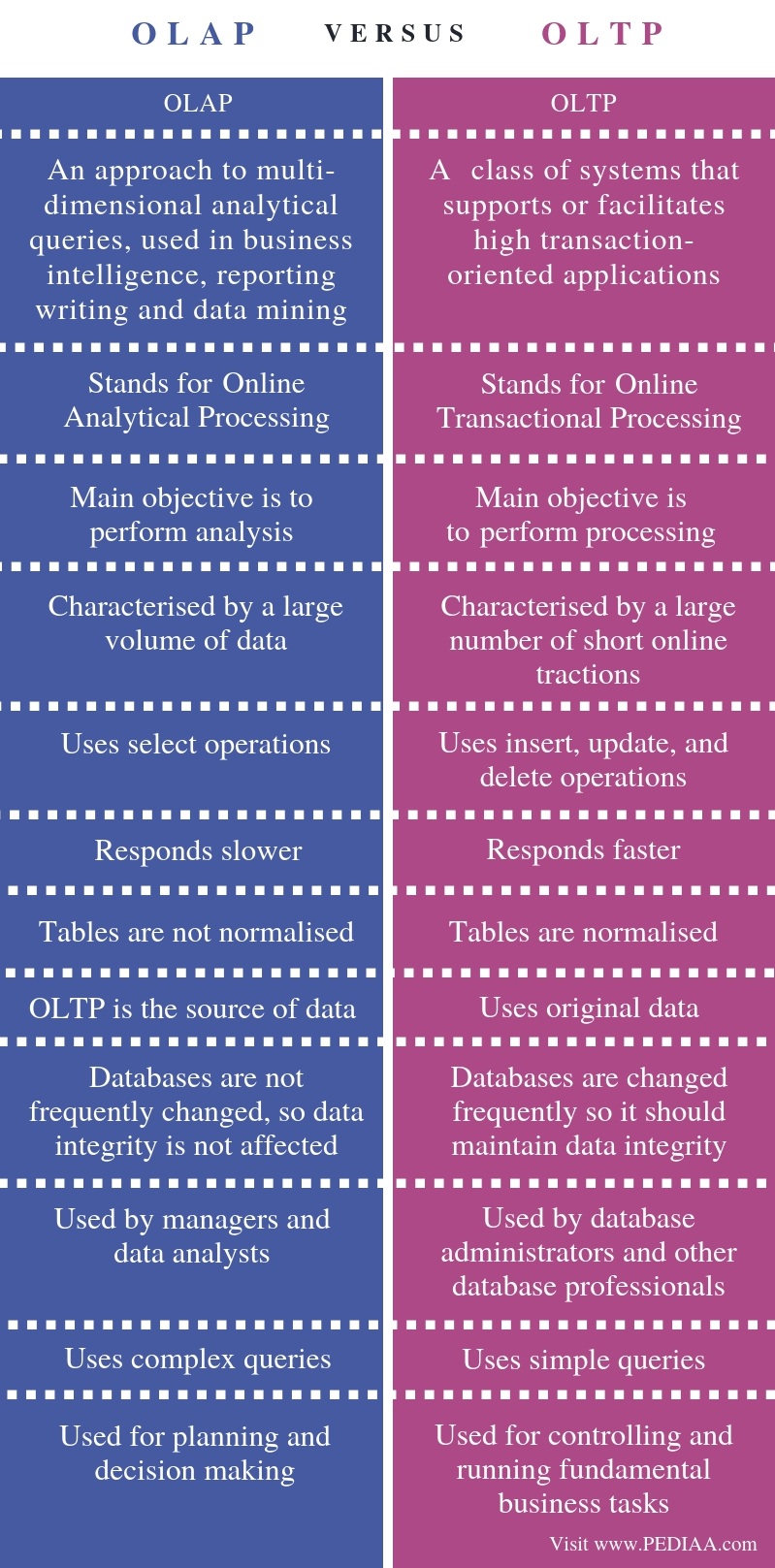

2. Differentiate between OLTP and OLAP.

3. Why do we need data transformation? What are the different ways of data transformation?

The

process of transforming the data into appropriate forms suitable for

the mining process is known as data transformation. We need data

transformation for changing the format, structure, or values of data.The different ways of data transformation are,

Smoothing - It is a process that is used to remove noise from the data set using some algorithmsAggregation - Data collection or aggregation is the method of storing and presenting data in a summary format.Normalization - It is done to scale the data values in a specified range like 0.0 to 1.0.Attribute Selection - In this strategy, new attributes are constructed from the given set of attributes to help the mining process.Generalization

- Here, low-level data are replaced with high-level data by using

concept hierarchies climbing. For Example-The attribute “city” can be

converted to “country”.Discretization - This is done to replace the raw values of a numeric attribute by ranges or conceptual levels.

4.

An airport security screening station wants to determine if passengers

are criminals or not. To do this, the faces of passengers are scanned

and kept in a database. Is this a classification or prediction task?

Justify

This is a classification task. Classification is the forms of data analysis. It helps to organise and categorise data in distinct classes.The Data Classification process includes two steps −

Building the Classifier or Model

- This step is the learning step or the learning phase.

- In this step, the classification algorithms build the classifier.

- The classifier is built from the training set made up of database tuples and their associated class labels.

- Each

tuple that constitutes the training set is referred to as a category or

class. These tuples can also be referred to as sample, object, or data

points.

Using Classifier for Classification- In this step, the classifier is used for classification.

- Here the test data is used to estimate the accuracy of classification rules.

- The classification rules can be applied to the new data tuples if the accuracy is considered acceptable.

5. Where do we use Linear regression? Explain linear regression.

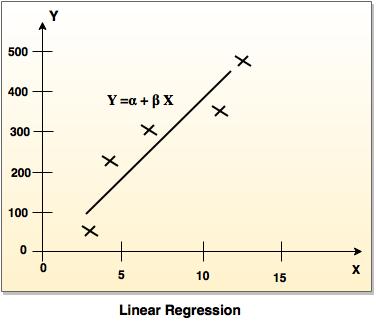

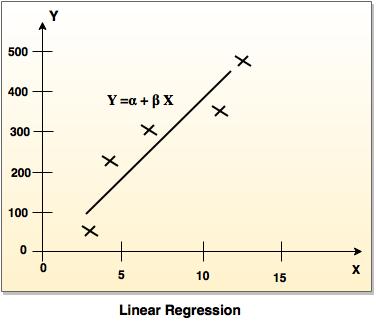

- Linear

regression is the simplest form of regression which attempts to model

the relationship between two variables by fitting a linear equation to

observe the data.

- Linear regression attempts to find the mathematical relationship between variables.

- If

the outcome is a straight line then it is considered as a linear model

and if it is a curved line, then it is a non-linear model.

- The relationship between the dependent variable is given by straight line and it has only one independent variable.

Y = α + Β XModel 'Y', is a linear function of 'X'.- The value of 'Y' increases or decreases in a linear manner according to which the value of 'X' also changes.

6. What is the significance of tree pruning in decision tree algorithms?

- Tree pruning is performed to remove anomalies in the training data due to noise or outliers.

- The pruned trees are smaller and less complex.

Tree Pruning Approaches

There are two approaches to prune a tree −Pre-pruning − The tree is pruned by halting its construction early.Post-pruning - This approach removes a sub-tree from a fully grown tree.

7. What are the two measures used for rule interestingness?

Some of the interestingness measures are,φ-coefficient: The phi-square is a measure of the correlation between two categoricalvariables in a 2 x 2 table. Its value can range from 0 (no relation between factors) to 1(perfect relation between the two factors).

Odds ratio (α): This measure represents the odds for obtaining the different outcomes

of a variable. We can use the ratio of these odds to determine the degree to which two terms

are associated with each other.

8. Given two objects represented by the tuples (22,1,42,10) and (20,0,36,8)Compute the Manhattan distance between the two objects.

d=|22-20|+|1-0|+|42-36|+|10-8| =2+1+6+2 =11

9. How density-based clustering varies from other methods?

- Most

partitioning methods cluster the objects, based on the distance between

objects. Such methods can find only spherical-shaped clusters and

encounter difficulty in discovering clusters of arbitrary shapes.

- Density-based clustering methods have been developed based on the notion of density.

- Based on connectivity and density functions

- Typical methods: DBSACN, OPTICS, DenClue

10. Differentiate web content mining and web structure mining.

Web content mining: - Web content mining is the application of extracting useful information from the content of the web documents.

- Web

content consists of several types of data – text, image, audio, video

etc. It can provide effective and interesting patterns about user

needs.

- Text

documents are related to text mining, machine learning and natural

language processing. This mining is also known as text mining.

Web structure mining:- Web structure mining is the application of discovering structure information from the web.

- The structure of the web graph consists of web pages as nodes and hyperlinks as edges connecting related pages.

- Structure mining basically shows the structured summary of a particular website.

- It identifies the relationship between web pages linked by information or direct link connection.

11. How is data warehouse different from a database? How are they similar?

Differences

- Database

is a collection of related data that represents some elements of the

real world whereas Data warehouse is an information system that stores

historical and commutative data from single or multiple sources.

- Database is designed to record data whereas the Data warehouse is designed to analyze data.

- Database is application-oriented-collection of data whereas Data Warehouse is the subject-oriented collection of data.

- Database uses Online Transactional Processing (OLTP) whereas Data warehouse uses Online Analytical Processing (OLAP).

Similarities - The

similarity between data warehouse and database is that both the systems

maintain data in the form of table, indexes, columns, views, and keys.

- Also, data is retrieved in both by using SQL queries.

12. Compare star and snowflake schema dimension table.

13. Explain the attribute selection method in decision trees.

If

the dataset consists of N attributes then deciding which attribute to

place at the root or at different levels of the tree as internal nodes

is a complicated step. So we use some criteria like :Entropy, Information gain, Gain Ratio,

14. Distinguish between hold out method and cross-validation method.

Hold-out

is when you split up your dataset into a ‘train’ and ‘test’ set. The

training set is what the model is trained on, and the test set is used

to see how well that model performs on unseen data. The hold-out method

is good to use when you have a very large dataset,

Cross-validation

or ‘k-fold cross-validation’ is when the dataset is randomly split up

into ‘k’ groups. One of the groups is used as the test set and the rest

are used as the training set. The model is trained on the training set

and scored on the test set. Then the process is repeated until each

unique group as been used as the test set.

15. Explain prepruning and postpruning approaches in decision tree algorithm.

Pre-pruning that stop growing the tree earlier, before it perfectly classifies the training set.Post-pruning that allows the tree to perfectly classify the training set, and then post prune the tree.

16. Differentiate between support and confidence.

Support is an indication of how frequently the itemset appears in the dataset.Confidence is an indication of how often the rule has been found to be true.

17. How to compute the dissimilarity between objects described by binary variables

- A binary variable has only two states: 0 or 1, where 0 means that the variable is absent, and 1 means that it is present.

- Treating binary variables as if they are interval-scaled can lead to misleading clustering results.

- Therefore, methods specific to binary data are necessary for computing dissimilarities.

- One approach involves computing a dissimilarity matrix from the given binary data.

- If all binary variables are thought of as having the same weight, we have the 2-by-2 contingency table

- Businesses use data mining for business intelligence and to identify specific data that may help their companies make better leadership and management decisions.

- Data mining experts sift through large data sets to identify trends and patterns.

- Various software packages and analytical tools can be used for data mining.

- The process can be automated or done manually.

- Data mining allows individual workers to send specific queries for information to archives and databases so that they can obtain targeted results.

2. Differentiate between OLTP and OLAP.

3. Why do we need data transformation? What are the different ways of data transformation?

The

process of transforming the data into appropriate forms suitable for

the mining process is known as data transformation. We need data

transformation for changing the format, structure, or values of data.

The different ways of data transformation are,

Smoothing - It is a process that is used to remove noise from the data set using some algorithms

Aggregation - Data collection or aggregation is the method of storing and presenting data in a summary format.

Normalization - It is done to scale the data values in a specified range like 0.0 to 1.0.

Attribute Selection - In this strategy, new attributes are constructed from the given set of attributes to help the mining process.

Generalization

- Here, low-level data are replaced with high-level data by using

concept hierarchies climbing. For Example-The attribute “city” can be

converted to “country”.

Discretization - This is done to replace the raw values of a numeric attribute by ranges or conceptual levels.

4.

An airport security screening station wants to determine if passengers

are criminals or not. To do this, the faces of passengers are scanned

and kept in a database. Is this a classification or prediction task?

Justify

- This step is the learning step or the learning phase.

- In this step, the classification algorithms build the classifier.

- The classifier is built from the training set made up of database tuples and their associated class labels.

- Each tuple that constitutes the training set is referred to as a category or class. These tuples can also be referred to as sample, object, or data points.

- In this step, the classifier is used for classification.

- Here the test data is used to estimate the accuracy of classification rules.

- The classification rules can be applied to the new data tuples if the accuracy is considered acceptable.

5. Where do we use Linear regression? Explain linear regression.

- Linear regression is the simplest form of regression which attempts to model the relationship between two variables by fitting a linear equation to observe the data.

- Linear regression attempts to find the mathematical relationship between variables.

- If the outcome is a straight line then it is considered as a linear model and if it is a curved line, then it is a non-linear model.

- The relationship between the dependent variable is given by straight line and it has only one independent variable.

Y = α + Β X

Model 'Y', is a linear function of 'X'.

- The value of 'Y' increases or decreases in a linear manner according to which the value of 'X' also changes.

- Tree pruning is performed to remove anomalies in the training data due to noise or outliers.

- The pruned trees are smaller and less complex.

Tree Pruning Approaches

There are two approaches to prune a tree −

Pre-pruning − The tree is pruned by halting its construction early.

Post-pruning - This approach removes a sub-tree from a fully grown tree.

φ-coefficient: The phi-square is a measure of the correlation between two categorical

variables in a 2 x 2 table. Its value can range from 0 (no relation between factors) to 1

(perfect relation between the two factors).

8. Given two objects represented by the tuples (22,1,42,10) and (20,0,36,8)

Compute the Manhattan distance between the two objects.

d=|22-20|+|1-0|+|42-36|+|10-8|

=2+1+6+2 =11

- Most partitioning methods cluster the objects, based on the distance between objects. Such methods can find only spherical-shaped clusters and encounter difficulty in discovering clusters of arbitrary shapes.

- Density-based clustering methods have been developed based on the notion of density.

- Based on connectivity and density functions

- Typical methods: DBSACN, OPTICS, DenClue

- Web content mining is the application of extracting useful information from the content of the web documents.

- Web content consists of several types of data – text, image, audio, video etc. It can provide effective and interesting patterns about user needs.

- Text documents are related to text mining, machine learning and natural language processing. This mining is also known as text mining.

Web structure mining:

- Web structure mining is the application of discovering structure information from the web.

- The structure of the web graph consists of web pages as nodes and hyperlinks as edges connecting related pages.

- Structure mining basically shows the structured summary of a particular website.

- It identifies the relationship between web pages linked by information or direct link connection.

11. How is data warehouse different from a database? How are they similar?

Differences

- Database is a collection of related data that represents some elements of the real world whereas Data warehouse is an information system that stores historical and commutative data from single or multiple sources.

- Database is designed to record data whereas the Data warehouse is designed to analyze data.

- Database is application-oriented-collection of data whereas Data Warehouse is the subject-oriented collection of data.

- Database uses Online Transactional Processing (OLTP) whereas Data warehouse uses Online Analytical Processing (OLAP).

Similarities

- The similarity between data warehouse and database is that both the systems maintain data in the form of table, indexes, columns, views, and keys.

- Also, data is retrieved in both by using SQL queries.

12. Compare star and snowflake schema dimension table.

13. Explain the attribute selection method in decision trees.

If

the dataset consists of N attributes then deciding which attribute to

place at the root or at different levels of the tree as internal nodes

is a complicated step. So we use some criteria like :

14. Distinguish between hold out method and cross-validation method.

Hold-out

is when you split up your dataset into a ‘train’ and ‘test’ set. The

training set is what the model is trained on, and the test set is used

to see how well that model performs on unseen data. The hold-out method

is good to use when you have a very large dataset,

Cross-validation

or ‘k-fold cross-validation’ is when the dataset is randomly split up

into ‘k’ groups. One of the groups is used as the test set and the rest

are used as the training set. The model is trained on the training set

and scored on the test set. Then the process is repeated until each

unique group as been used as the test set.

15. Explain prepruning and postpruning approaches in decision tree algorithm.

Pre-pruning that stop growing the tree earlier, before it perfectly classifies the training set.

Post-pruning that allows the tree to perfectly classify the training set, and then post prune the tree.

16. Differentiate between support and confidence.

Support is an indication of how frequently the itemset appears in the dataset.

Confidence is an indication of how often the rule has been found to be true.

17. How to compute the dissimilarity between objects described by binary variables

- A binary variable has only two states: 0 or 1, where 0 means that the variable is absent, and 1 means that it is present.

- Treating binary variables as if they are interval-scaled can lead to misleading clustering results.

- Therefore, methods specific to binary data are necessary for computing dissimilarities.

- One approach involves computing a dissimilarity matrix from the given binary data.

- If all binary variables are thought of as having the same weight, we have the 2-by-2 contingency table